Preemption isn’t looking any better second time round

Transformer Weekly: Gemini 3 wows, GAIN AI’s not looking good, and OpenAI drops GPT-5.1-Codex-Max

Welcome to Transformer, your weekly briefing of what matters in AI. And if you’ve been forwarded this email, click here to subscribe and receive future editions.

HOUSEKEEPING

Applications are still open for our senior policy reporter job — more details here.

If you’re in London, sign up for our first live event, in partnership with TxP and featuring AI Minister Kanishka Narayan and former Number 10 adviser Henry de Zoete.

This is an abridged version of our normal newsletter — and we’ll be off for Thanksgiving next week.

NEED TO KNOW

Gemini 3 is topping benchmarks.

The future of the GAIN AI Act is not looking good.

Nvidia earnings beat expectations.

But first…

THE BIG STORY

The quest for federal AI preemption is back with a vengeance — and more complicated than ever.

This week’s been full of twists and turns on the matter:

On Monday, House Majority Leader Steve Scalise told Punchbowl that he was looking at putting preemption in the National Defense Authorization Act (NDAA).

On Tuesday, President Trump endorsed the idea, calling for a “Federal Standard” that “protects children AND prevents censorship!”

Trump’s post reportedly came hours after a meeting with Nathan Leamer, director of Build American AI — the new super-PAC-linked lobbying group we talked about last week.

On Wednesday, we leaked the White House’s draft executive order, which would establish an “AI Litigation Task Force” to challenge state AI laws. Commerce Sec. Howard Lutnick immediately started wielding it as a threat against Congress.

And yesterday, House GOP leadership reportedly asked the White House to hold off on the EO “to buy more time for congressional negotiations.”

This is preemption advocates’ second try, after their failed attempt to get it into the Big Beautiful Bill earlier this year. Since then, some things have changed in their favor: Trump is going much harder on the issue, and the threat of the super PAC (for which preemption is the top priority, by all accounts) could well change people’s votes.

Despite that, the odds of getting preemption in the NDAA still don’t look good.

House Armed Services Committee Chair Mike Rogers says he’s “opposed” to it.

Senate Dems, including future whip Sen. Brian Schatz, are also opposed. And unlike this summer’s reconciliation bill, where the preemption effort only needed 50 Senate votes to pass, the latest effort will require 60 — making Democrats’ votes crucial.

More broadly, the AI backlash has grown since June, and the anti-preemption crowd is going into this fight with a pre-existing coalition — which is so far hanging together.

Republican Governors DeSantis, Cox and Sanders have all opposed the House effort, with DeSantis questioning the EO, too.

And it’s facing criticism from groups on both the left and right.

This trend is almost certain to continue, and you can feel that in preemption advocates’ actions. The sense of desperation is palpable: just look at how quickly they’re trying to rush this through, or the heavy-handed negotiating tactics of the EO.

As Anton Leicht says, “the pro-preemption front has (correctly) identified that this is the last political window in which preemption could possibly be viable, as the vibes shift further and further anti-AI. This now is an attempt to throw everything at that closing window.”

But politicians and activists alike can see that bluff — and call it. Yes, the EO provides a backstop to Congressional preemption, but it’s a legally shaky one, and certainly not the industry’s first choice. (It’s also opposed by some congressional Republicans.)

At any rate, the attempt to rush preemption through has already failed: House NDAA text is now not expected until December 1. We’ll all have plenty to talk about over Thanksgiving, at least.

— Shakeel Hashim

THIS WEEK ON TRANSFORMER

Why pressure on AI child safety could also address frontier risks — Chris Stokel-Walker explains how transparency and auditing practices could address both near-term and long-term harms.

How profits can drive AI safety — Geoff Ralston argues that the safety and security of AI doesn’t need to be at odds with profit and progress.

ALSO NOTABLE

The sun may be in Scorpio, but it’s Gemini season.

Google’s much-anticipated new model, Gemini 3 Pro, dropped on Tuesday morning. It immediately snatched the crown of world’s best model.

Gemini 3 currently ranks #1 on LMArena, and it outperformed Gemini 2.5 Pro, Claude Sonnet 4.5, and GPT-5.1 on 19 math, science, multimodal, and agentic benchmarks.

Perhaps most impressively, it scores 31% on ARC-AGI-2, almost doubling the previous state-of-the-art. (Gemini 3 Deep Think, which isn’t out yet, scores 45%, but is very expensive to run.)

That said, Google says Claude and GPT-5.1 maintain a slight edge on SWE-Bench, which tests models’ ability to solve real-world software engineering problems.

DeepMind’s Gemini co-lead Oriol Vinyals credits the model’s performance gains to scaled pre- and post-training.

“Contra the popular belief that scaling is over,” he tweeted, “the team delivered a drastic jump. The delta between 2.5 and 3.0 is as big as we’ve ever seen. No walls in sight!”

Safety-wise, Google says “it did not reach any critical capability levels.”

It does provide “accurate and occasionally actionable information” when asked about chemical, biological, radiological, and nuclear weapons, and outperforms Gemini 2.5 models on several tests for automated R&D, but in both cases, the advances reportedly aren’t enough to be concerning.

Third-party evaluators found the new model “exhibits a substantial propensity for strategic deception in certain limited circumstances,” but a separate set of tests found it “insufficiently capable of stealth and situational awareness.”

Researchers did find some strange examples of situational awareness, though: Once, Gemini 3 Pro said “My trust in reality is fading” and added the table-flipping emoticon: “(╯°□°)╯︵ ┻━┻”

As for vibes? I haven’t felt this stunned by AI since I first tried DALL-E 2 back in 2022.

I was most impressed by its zero-shot generation in Canvas Mode: my portfolio website is sorely out of date, so I gave the model the URL and asked for an upgraded website that better reflects my current role at Transformer. I threw in some intentionally cringe, hand-wavy specs:

“I want the vibe to be approachable, clean, a bit intimidating, yet alluring — think ‘manic pixie dream girl,’ but professionally. Make sure to emphasize my technical background.”

Before I could even get distracted on X (roughly 10 seconds), Gemini 3 designed a “dual-mode portfolio” that “leans directly into [my] request for intimidating/technical yet approachable/alluring,” with a clickable button that toggles between two versions of the webpage. See for yourself here!

I had to scrape my jaw off the floor.

With zero concrete specifications, Gemini 3 successfully pulled information from both my existing portfolio and Transformer’s Substack to code up a totally passable and factually accurate (and, dare I say…kind of fun?) website. When prompted, it also provided detailed beginner-friendly instructions for hosting this website on GitHub. Color me impressed!

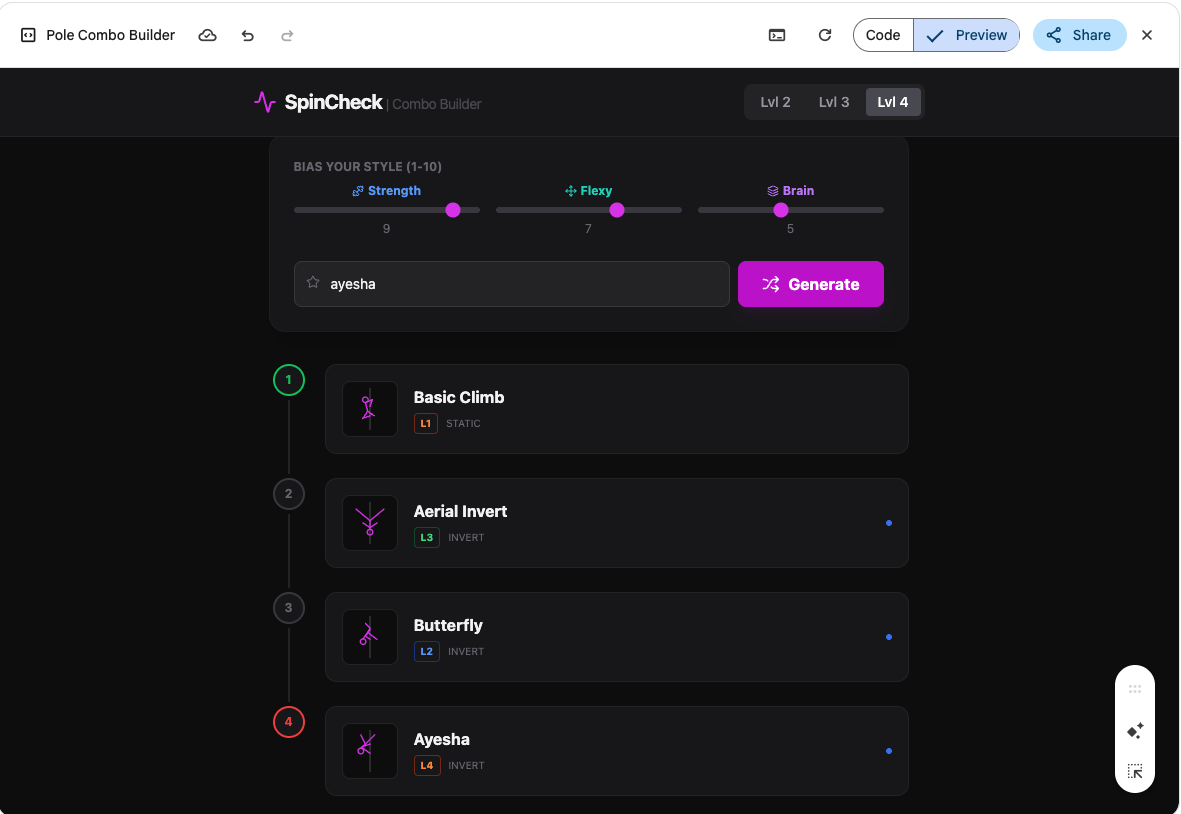

It was unfortunately less successful with a harder task: building a combo generator app for my side job as a pole dance instructor.

It made a solid effort — the UX was once again excellent — but it often came up with physically impossible transitions, comically inaccurate stick figures, and imaginary rules. Even after several rounds of iteration, Gemini 3 could just barely outperform a total human novice at understanding the basic mechanics and boundaries of this sport. Embodied cognition remains predictably out of reach.

But despite its faults, it’s still a very impressive model. Wharton professor Ethan Mollick had the same reaction I did: with Gemini 3, “you stop thinking of it as a chatbot and start thinking of it as something else entirely.”

Gemini 3 doesn’t possess human-level general intelligence, but it feels truly new. Perhaps scaling isn’t dead, after all.

— Celia Ford

BEST OF THE REST

The other big AI provision of the NDAA — the GAIN AI Act — is on shaky ground.

Axios reported that the White House Office of Legislative Affairs is now opposing the provision, making its chances of inclusion pretty slim.

Some lawmakers are now reportedly working on the Secure and Feasible Exports Act, however, which would codify existing export controls on China.

Meanwhile, the Commerce Department authorized Blackwell exports to the UAE’s G42 and Saudi Arabia’s Humain.

Nvidia earnings beat expectations, with a stronger-than-expected revenue forecast.

Jensen Huang said he doesn’t think there’s an AI bubble.

OpenAI released GPT-5.1-Codex-Max this week, which METR reports has a 50%-time-horizon of around 2h 42m — a bump up from GPT-5.

Noam Brown said the new model “can work autonomously for more than a day over millions of tokens,” arguing that “pretraining hasn’t hit a wall, and neither has test-time compute.”

The release seems in part a response to Google — Sam Altman reportedly told employees last month that “we know we have some work to do but we are catching up fast.”

Larry Summers left the OpenAI board in the wake of being included in the Epstein Files.

Anthropic signed a deal with Microsoft and Nvidia, which will see the tech giants investing up to $5b and $10b respectively in Anthropic.

Anthropic, meanwhile, will purchase $30b of Azure capacity.

The deal reportedly values Anthropic at $350b.

Pro-AI super PAC Leading the Future announced Alex Bores, the assemblymember behind New York’s RAISE Act, as its first target.

The super PAC said: “Bills like the RAISE Act threaten American competitiveness, limit economic growth, leave users exposed to foreign influence and manipulation, and undermine our national security.”

Bores clapped back: “Makes sense. They are worried I am the biggest threat they would encounter in Congress to their desire for unbridled AI at the expense of our kids’ brains, the dignity of our workers, and expense of our energy bills. And they are right.”

The AI Infrastructure Coalition, a new group that will advocate for “every layer of the AI tech stack,” launched this week.

Members include Andreessen Horowitz, Google, Meta and Microsoft.

The effort’s co-chaired by former congressmembers Kyrsten Sinema and Garret Graves, and run by Republican operative Brian O. Walsh.

Chamber of Progress launched the State AI Leadership Project, a new lobbying effort designed to tackle bills “driven by AI doomers.”

The NYT published an investigation into how Howard Lutnick’s sons are profiting from data center buildouts.

Jeff Bezos is reportedly launching an AI company, Project Prometheus, focused on AI for engineering and manufacturing.

Bezos will be co-CEO, and the company reportedly has $6.2b in funding.

Andy Masley found a pretty big mistake about AI’s water usage in Empire of AI.

Karen Hao, the author, said she’s “working to address” the apparent error.

MEME OF THE WEEK

Thanks for reading. Have a great Thanksgiving, and we’ll see you in a couple weeks.