The worst (and funniest) AI takes of 2025

feat. Mark Zuckerberg, Gary Marcus, and ... ourselves.

The development of artificial intelligence may or may not be the most important event in human history. What is certain is that it has produced some of the most insufferable discourse.

In 2025, the AI world outdid itself. Timelines were revised before the ink dried. Billionaires redefined “superintelligence” to mean “smart glasses.” Everyone was wrong about AI’s water usage. And through it all, everyone kept posting (us included).

Here are 13 of the year’s worst — and funniest — takes.

AI ~~2027~~ ~~2028~~ 2030

AI 2027 — the bleak forecast at the center of this year’s doom discourse — was published back in April, and seemingly everyone read it, or at least saw a tweet about it (even JD Vance!). The title suggested a clear takeaway: if trends continue, AGI will exist by 2027, and it won’t look pretty. Except, uh:

Daniel Kokotajlo: “Our timelines were longer than 2027 when we published, and now they are a bit longer still.”

As OpenAI staffer Roon put it: “bro what.”

Timelines are lengthening

“Imminent AGI” is out of vogue. “Bearish AGI timelines” are in.

As White House adviser Sriram Krishnan wrote earlier this month, “timelines keep expanding.” He wasn’t the only one: everyone from Gary Marcus to Ilya Sutskever to Richard “Bitter Lesson” Sutton declared that scaling is dead and AGI isn’t coming anytime soon.

Except… as Helen Toner observed in April, a "short" timeline to advanced AI used to be on the order of decades. Yet now, even a single decade qualifies as a "long" timeline in the right company. At least many of us will be alive for the ~~rapture~~ AI apocalypse, at this rate!

AI is normal…or is it?

Less than two weeks after AI 2027 dropped, Arvind Narayanan and Sayash Kapoor published “AI as Normal Technology,” a vision of AI as impactful, but like…fine. A tool, perhaps, like the internet — but not a horseman of the apocalypse.

As the debate heated up and new models underwhelmed, Narayanan and Kapoor watched people point to their paper to say, “See, AI is stupid and won’t do anything!” So, they clarified that by “normal,” they didn’t mean “trivial” — they meant “AI as Technology” (???).

And a few months later, they wrote that “in the long run, [we] expect AI could automate most cognitive tasks” and that “if strong AGI is developed and deployed in the next decade, things would not be normal at all.” So much for clarity.

Superintelligence means…AI glasses?

Mark Zuckerberg noticed that everyone started the AGI race without him, panicked, and dropped a weird manifesto about “personal superintelligence” this summer:

“Personal superintelligence that knows us deeply, understands our goals, and can help us achieve them will be by far the most useful. Personal devices like glasses that understand our context because they can see what we see, hear what we hear, and interact with us throughout the day will become our primary computing devices.”

As we wrote at the time, this is an entirely incoherent vision of what superintelligence would actually mean. Perhaps no surprise, then, that Meta continues to struggle to catch up in AI.

Gary Marcus self-identifies as a clown

When NYU professor Alfredo Canziani posted a brutal subtweet, few could guess what would happen next.

“Wild how a clown who has never trained a neural net feels entitled to criticise an expert who dedicated his life to pushing this field forward while also teaching all the non-obvious details to newcomers,” Canziani wrote.

Enter Gary Marcus: “this is defamatory and demonstrably false. you should take it down and apologize.”

You can’t make this stuff up:

Alfredo: “Who are you? 😯”

Gary: “take it down or i will sue. It is an outright lie.”

Alfredo: “Hey buddy, I have no idea who you are. Really.”

As anon account Tenonbrus wrote: “gary marcus seeing this descriptor and immediately identifying with it so strongly he decides to [pursue] legal action is some of the funniest shit i’ve ever seen.”

iS tHe bUbBle gOnNa pOp?!

Probably. How, and to what end? Unclear.

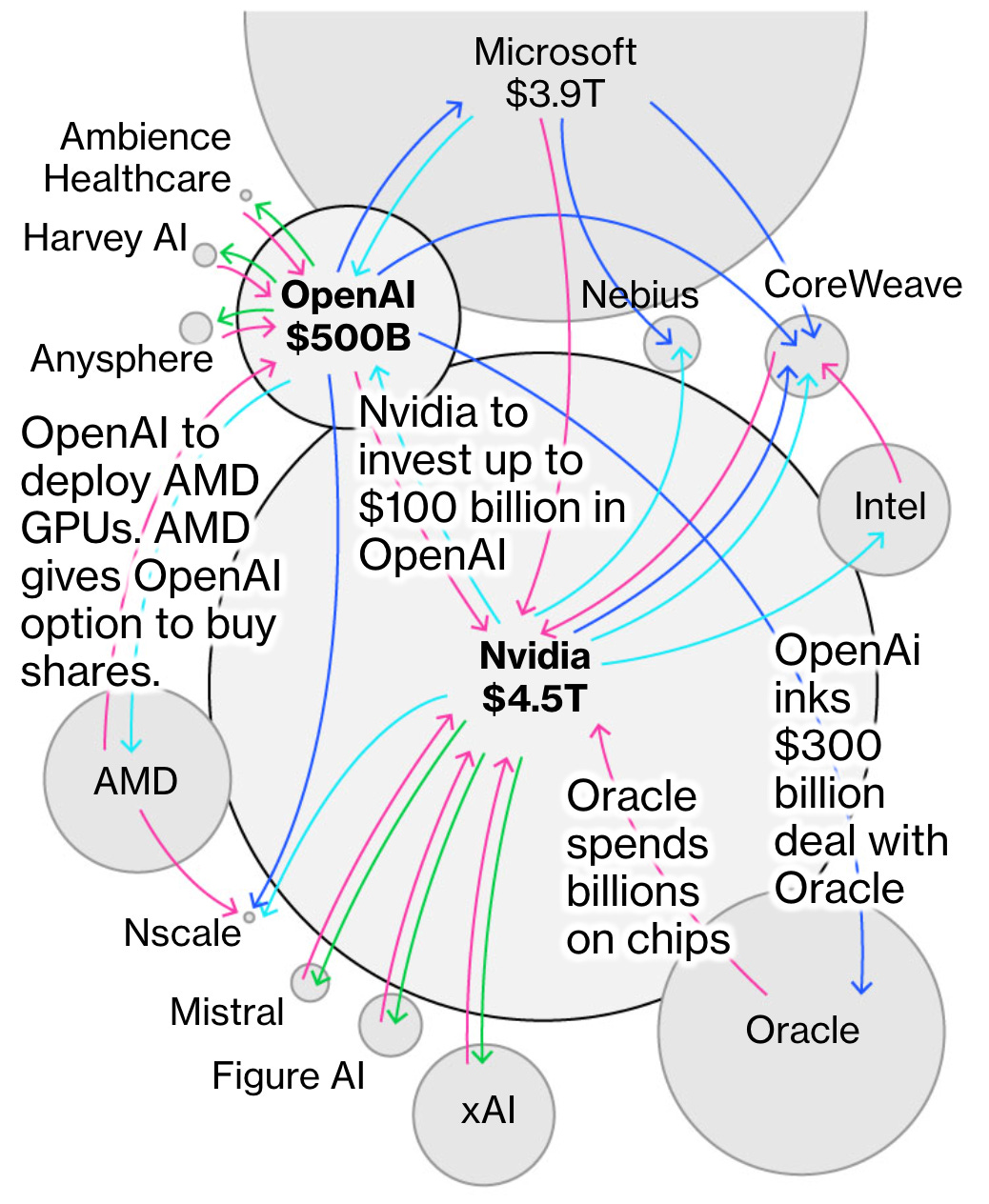

There have been countless analyses, thinkpieces, and tweets about the American economy this year, which appears to be entirely propped up by a circular AI money machine.

As one anon tweeted: “so is the u.s. economic plan really just ‘build the machine god’”

This viral illustration certainly made it seem so:

When journalists asked whether investors are overhyping AI, even Sam Altman responded: “My opinion is yes…Someone is going to lose a phenomenal amount of money. We don’t know who.”

The data center water wars

In November, EA DC director Andy Masley shared The Mistake Heard ‘Round The World: in her book Empire of AI, Karen Hao claimed that a data center uses 1000x as much water as a city of 88,000 people…when it actually uses about 0.22x as much.

Hao responded promptly and corrected the error — but of course, the discourse didn’t stop there. Impressively, all sides of this debate were equally insufferable.

Timnit Gebru, founder of the Distributed Artificial Intelligence Research Institute, picked a fight with Masley on X (and ultimately blocked him). She then took the roast to Bluesky, where AI ethics-y folks gathered to dunk on “well, actually” EAs for correcting the narrative that data centers are supercharging a water crisis.

To be clear, many of the Well Actually Guys (though not Masley) were also extremely annoying: it’s sometimes hard to tell where good-faith error correction ends and “let’s belittle women of color” begins.

But it’s true: data centers don’t consume significantly more water than a bunch of other stuff we all do, and arguing otherwise is misleading and misguided. Not that it’ll stop news outlets from continuing to imply the opposite.

AI safety is ~~good~~ ~~bad~~ good at politics

Transformer editor Shakeel Hashim, June 30, 2025: “AI safety ecosystem maybe needs to reckon harder with how it keeps losing every single major policy fight.”

Reuters, July 1, 2025: “US Senate strikes AI regulation ban from Trump megabill”

Shakeel Hashim, July 1, 2025: “While this certainly was a win for the AI safety world, I’m not convinced it’s as significant a victory as it might initially seem.”

Shakeel Hashim, December 3, 2025: “Another preemption defeat shows the AI industry is fighting a losing battle.”

(Please subscribe to Transformer for more excellent analysis.)

OpenAI goes all-in on Trump

OpenAI strayed further from its mission this year than ever before.

In February, global affairs boss Chris Lehane said: “Perhaps the biggest risk of all [in AI] is actually missing out on the economic opportunities that come from this technology.”

But the most shameless of all OpenAI’s actions was its response to the AI Action Plan, in which long-time Democrat Lehane went to great lengths to cosplay as a Republican. The vibes were awful — and irresponsible in a way we’ve now come to expect. (Oh, and they subpoenaed a bunch of non-profits, too.)

The billionaires are fighting

Sam Altman baited Elon Musk with a screenshot of his cancelled Tesla order. Elon bit.

Elon: “You stole a non-profit”

Sam: “i helped turn the thing you left for dead into what should be the largest non-profit ever.”

Automation rage bait

RL environment startup Mechanize declared: “Full automation is inevitable, whether we choose to participate or not. The only real choice is whether to hasten the inevitable, or to sit it out.”

DeepMind’s Séb Krier tweeted: “It’s wild how few of the ‘just do things’ people actually believe in agency.”

In an infuriating conversation with Hard Fork hosts Kevin Roose and Casey Newton, Mechanize co-founders Ege Erdil and Matthew Barnett had no plan for cushioning the fallout of near-term mass unemployment, and struggled to make the case that anything they’re doing is ethical.

In response to Newton asking what the government should do to prepare us for a more automated future, Erdil responded: “It is very hard for me to say what we could do today to make a world in which all jobs have been automated, or most jobs have been automated, much better.”

When Roose recommended finding some empathy for people who fear job loss, Erdil acknowledged: “It feels kind of cold for me to say that I feel empathy for you, but I just think these benefits that I’m listing are just much greater than the costs. But that’s always, I think, what it sounds like when someone’s performing some sort of utilitarian calculus.”

Schmidhuber continues to Schmidhuber

Jürgen Schmidhuber is well-known for his repeated claims that he invented everything important in artificial intelligence and everyone else has plagiarized him. Last year, he wrote a paper which accused Geoffrey Hinton and John Hopfield of winning a “Nobel Prize for plagiarism.”

But his best moment yet came just last week, when Schmidhuber commented on a picture of Hinton, Hopfield, Yann LeCun and Yoshua Bengio alongside King Charles:

“I am convinced that King Charles, for whom I have the utmost respect, did not know that 4 of the 7 2025 Queen Elizabeth awardees (Drs. Hinton, Bengio, LeCun, Hopfield) repeatedly republished key methods & ideas whose creators they failed to credit, not even in later surveys.”

Economics professor Ben Golub quote-tweeted: “Finally, a good use for ceremonial monarchs: Being constitutionally obligated to read tweets like this.”

The Antichrist did not have sex with anyone under the age of 18

In a series of secretive lectures this fall, Peter Thiel finally revealed the identity of the Antichrist: none other than If Anyone Builds It, Everyone Dies co-author Eliezer Yudkowsky.

He probably isn’t. But Yudkowsky didn’t do himself any favors when, a month later, he posted a 2,143-word tweet which, 2,004 words in, includes the line:

“To the best of my knowledge, I have never in my life had sex with anyone under the age of 18.”

What did we miss?

AI moves fast, and is being developed by some of the most exhausting people on earth — of course, we couldn’t include everything. Did we miss your favorite hot take, beef, or rage bait? Let us know.
