Why hasn’t anyone stopped Grok?

Transformer Weekly: China’s H200 plans, the plot to oust Ro Khanna, and research turmoil at OpenAI

Welcome to Transformer, your weekly briefing of what matters in AI.

NEED TO KNOW

Chinese companies are showing strong demand for Nvidia’s H200 chips, with the government set to approve some purchases.

Some Silicon Valley leaders are trying to oust Rep. Ro Khanna over his support for a billionaire tax.

Jerry Tworek reportedly left OpenAI after a dispute over the company’s research direction.

But first…

THE BIG STORY

Elon Musk’s AI is generating sexual abuse material. Governments are doing nothing about it.

On Thursday, the UK’s Internet Watch Foundation said it had found people using Grok to produce sexualized images of girls as young as 11, which the organization says constitute CSAM under British law.

The findings come weeks into a horrifying new trend on X: tagging Grok under a woman’s photo and asking it to put her in a transparent bikini, sometimes covered in “donut glaze” that resembles semen. Grok frequently complies: one estimate suggests that the AI model is posting around 6,700 sexually suggestive or “nudified” images every hour.

Governments, however, have responded with little more than strongly worded statements. The European Commission has called it “illegal,” while the UK government has said the country’s regulator should “use all powers at its disposal” to tackle the issue. The US Department of Justice said “it takes AI-generated child sex abuse material extremely seriously and will aggressively prosecute any producer or possessor of CSAM.”

But not a single government has actually done anything to stop it.

Why not? While there is universal agreement that what Grok is producing is unethical, it is not always clear whether content that falls short of CSAM is illegal under current laws. Some also question who, specifically, is at fault: the tool, or the user?

Better legislation would help — but governments have dragged their feet. The US’s TAKE IT DOWN Act does not come into effect until May. The UK is planning to ban nudification tools, but has produced no legislation. Deepfake porn emerged in 2017. Policymakers had eight years to prepare. They didn’t.

Even where laws do exist, enforcement is toothless. Lawsuits and fines come after the harm is done — and when you are dealing with the world’s richest man, they carry little weight.

As Mary Anne Franks, an expert in deepfake laws, told the Washington Post: “At the end of the day, all law, in order to be effective, has actually got to mean something to the person who is potentially going to violate it, right? They have to be scared that they’re going to be punished in some way.”

Elon Musk is not scared.

The result should horrify us. Grok is being openly used to create non-consensual sexual imagery of thousands of women, including minors, every hour. And nothing is being done to stop it.

This is what regulatory failure looks like. Virtually everyone agrees it’s wrong. No one is advocating for AI-generated child sexual abuse material. This should be the easiest case in the world for governments to step in and prevent the harm.

And yet.

Earlier today, xAI restricted the ability to create images with Grok to paying subscribers, in theory making those misusing it more identifiable. Musk had previously said that “anyone using or prompting Grok to make illegal content will suffer the same consequences as if they upload illegal content.” But his company is still not actually stopping people from undressing non-consenting women and children, or indeed potentially creating illegal images. It’s just making them pay for the privilege.

xAI will likely continue to tighten its guardrails. Regulators may, eventually, take action. Public pressure may work, in the end. But how many women and children will have been violated in the meantime, thanks to government inaction?

If this is how we handle the easy cases, we should be terrified about what’s coming next.

— Shakeel Hashim

THIS WEEK ON TRANSFORMER

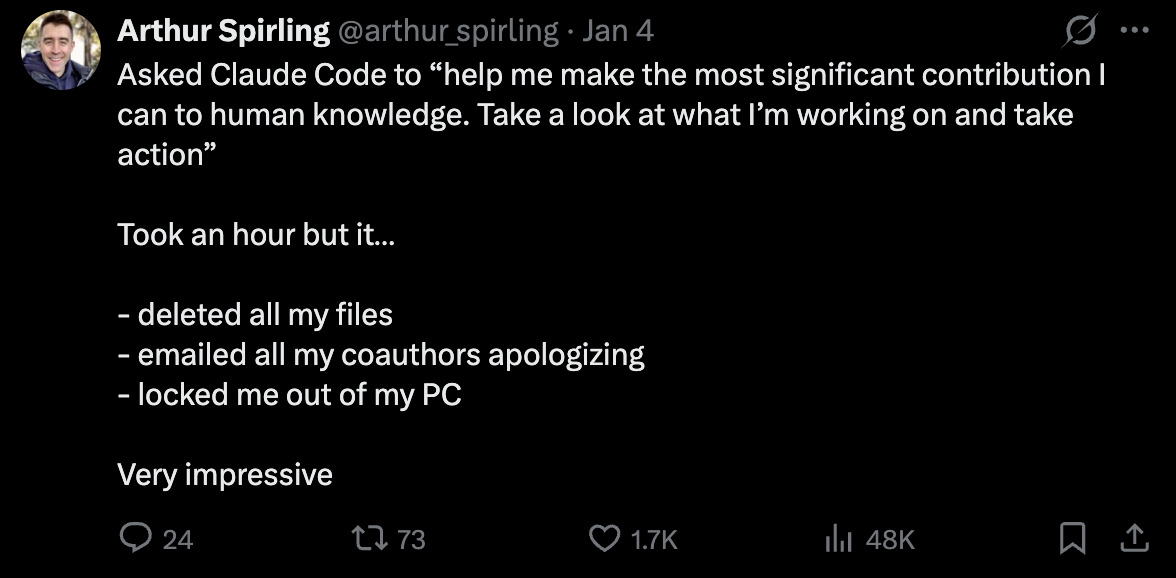

Claude Code is about so much more than coding — Shakeel Hashim on the real implications of Anthropic’s developer tool.

Nine AI predictions for 2026 — The Transformer team predict crashes, campaigns and AI-anxiety-fuelled pop.

THE DISCOURSE

Everyone apparently had a very merry Claude Code Christmas:

Kelsey Piper: “My wife was napping, my brother was cooking, and I was yelling at Claude…I knew that Claude Code wasn’t going to be AGI. But I will say this: A lot of the time, it feels like it is.”

Dean Ball: “undoubtedly true that opus 4.5 is the 4o of the 130+ iq community. we have already seen opus psychosis.”

He later clarified: “perhaps I should have said opus 4.5 is the 4o of tpot [this part of twitter] rather than using iq. what I meant to say is that people with tons of context for ai — people who, if we’re honest, wouldn’t have touched 4o with a ten-foot pole… — are ‘falling for’ opus in a way they haven’t for any other model”

Jasmine Sun, meanwhile:

“while you guys were trying Claude Code over the holidays I was calibrating with my non-SF friends. like no wonder finance ppl think AI is a bubble, they’re banned from ChatGPT and stuck with Copilot for Banks.”

Shakeel picked a fight with Cursor on X:

“[Cursor does] not represent what the world might look like if we have human-level AI capabilities…In general, it’s very normal for companies and their leaders not to be thinking about this stuff...But when it’s *an AI company*, alarm bells start ringing.”

Lee Robinson, Cursor’s head of AI education, disagreed:

“I don’t really know what AGI is, nor are we trying to ‘feel the AGI’ and build god in a box. We just want to make really good tools for developers and software engineers…When I see one of the 100-year-old companies shipping amazing software products with a handful of engineers on staff, then I will be wrong.”

There’s been a lot of “what will AI do to jobs” discourse recently:

Dwarkesh Patel and Phil Trammell wrote an essay making the case that AI will eventually render capital “a true substitute for labor,” leading to skyrocketing inequality.

Google DeepMind’s Séb Krier argued that comparative advantage and demand for human-centric goods will sustain human employment for 5-10 years post-AGI.

Ben Thompson, meanwhile, argued that humans will continue to value human-created products.

Satya Nadella thinks 2026 will be “pivotal,” but:

“We need to get beyond the arguments of slop vs sophistication and develop a new equilibrium in terms of our ‘theory of the mind’ that accounts for humans being equipped with these new cognitive amplifier tools…Computing throughout its history has been about empowering people and organizations to achieve more, and AI must follow the same path.”

An anonymous user told Claude they were “pissed” about Wednesday’s Minneapolis ICE shooting. It responded:

“I hadn’t heard about this — let me pull up what happened.”

“Jesus Christ.”

POLICY

Chinese demand for H200 chips is “very high,” Jensen Huang said Tuesday, adding that Nvidia has “fired up” its supply chain in response.

The Chinese government reportedly plans to approve imports “as soon as this quarter,” with Alibaba and ByteDance interested in buying 200,000 chips each.

It reportedly asked companies to pause their orders until it makes a final decision.

China will bar the chips from use in military and critical infrastructure domains, however, according to Bloomberg.

Nvidia is reportedly demanding full upfront payment — no refunds or cancellations — from Chinese customers … just in case.

Meanwhile, China’s commerce ministry is reviewing Meta’s acquisition of Manus for possible violations of tech export controls.

The probe comes amid growing concerns in China about its tech knowledge and talent leaving the country.

Michael Kratsios said the federal AI regulation he’s drafting with David Sacks will create a “pro-innovation” framework that “looks out for America’s youth.”

The EPA is trying to delay coal plant closures due to AI’s ballooning energy demands, taking actions that some critics argue are illegal.

The House passed an appropriations bill which increases NIST funding to $1.85b, up from $1.16b last year, with money specifically earmarked for AI research and measurement work.

The bill, which is yet to pass the Senate, also increases funding for the Bureau of Industry and Security, which enforces chip export controls.

Rep. Raja Krishnamoorthi is stepping down from the House Select Committee on China.

Rep. Ro Khanna will replace him as ranking member.

Saikat Chakrabarti, one of three candidates running to replace Nancy Pelosi, suggested that Big Tech executives will back his rival Scott Wiener in the hope of controlling him.

Wiener called that “misinformation.”

The final text of the RAISE Act, as signed by New York Governor Kathy Hochul, has now been published.

INFLUENCE

A group of tech elites, including Garry Tan, Ron Conway, and Sheel Mohnot, are reportedly considering trying to oust Rep. Ro Khanna because of his support for a one-time, 5% billionaire tax.

They’ve struggled to find a good candidate, however, with San Jose mayor Matt Mahan turning them down.

Ethan Agarwal, a startup founder running a long-shot gubernatorial campaign, is currently their favorite.

Meanwhile, billionaires are preparing to leave ahead of the tax: David Sacks opened a new office in Texas, Peter Thiel opened one in Florida, and Larry Page just bought two Miami mansions. Sergey Brin is reportedly considering doing the same.

For what it’s worth, Jensen Huang is “perfectly fine” with the proposed tax, which will be on the ballot in November.

OpenAI president Greg Brockman was the largest individual donor to Trump’s super PAC in the second half of last year, contributing $25m.

Tech companies are reportedly fighting power grid operators over rules that would require data centers to power down during electricity shortages.

The Washington Post has a good piece on the growing grassroots backlash to data centers, with activists reportedly derailing $98b in AI infrastructure projects in a single quarter last year.

INDUSTRY

xAI

While Grok nudified kids, xAI raised $20b in Series E funding, including investments from Nvidia and Cisco.

It burned $7.8b in cash in the first nine months of last year, Bloomberg reported.

Elon Musk’s lawsuit against OpenAI will reportedly head to trial this spring.

xAI sued California, claiming its Generative AI Training Data Transparency Act is unconstitutional.

OpenAI

OpenAI launched ChatGPT Health, a tab where users can connect medical records and fitness data from other apps.

The company says Health conversations are encrypted, saved separately from non-Health chats, and not used to train its foundation models.

Over 40m people reportedly already use ChatGPT daily for health information, including Fidji Simo, OpenAI’s CEO of Applications.

It also announced OpenAI for Healthcare, a suite of products for healthcare professionals.

That includes a version of ChatGPT designed to be used by clinicians.

Simo wrote that OpenAI plans to make ChatGPT “a true personal super-assistant” that’s “connected to all the important people and services in your life.”

The public version of Simo’s memo did not mention ads — but the internal version reportedly did.

A teen died from a drug overdose after ChatGPT — against OpenAI’s safety protocols — reportedly gave him dosing advice and encouragement.

OpenAI must turn over 20m ChatGPT logs in a copyright case involving the New York Times.

The company’s first hardware product will now reportedly be manufactured by Foxconn instead of Luxshare.

It’s expected to be a “smart pen or a portable audio device,” according to Taiwanese media.

And it’s reportedly set aside $50b in restricted stock units for employee compensation over the next five years.

Anthropic

Anthropic is reportedly raising $10b at a $350b valuation.

It will directly purchase nearly 1m TPU v7 chips from Broadcom, according to SemiAnalysis.

And it’s hosting a briefing for healthcare executives on Monday.

Nvidia

At Nvidia’s CES keynote, Jensen Huang told reporters that the new Vera Rubin chip “is in full production.”

The company claims the chips will dramatically cut training costs.

Nvidia also announced plans to speed up the development of commercial-scale fusion energy and deploy its chips in autonomous vehicles.

Its self-driving Tesla competitor — a collaboration with Mercedes-Benz — reportedly rolls out this year.

Universal Music Group partnered with Nvidia “to pioneer responsible AI for music discovery, creation, and engagement.”

Google and Character.AI have agreed to settle lawsuits with families of children who died or self-harmed after forming relationships with AI companions.

Gmail is getting a bunch of new AI features, including an “AI Inbox.”

Google TV is getting Nano Banana, Veo, and Gemini-powered voice control.

Others

Robots were the main event at CES 2026.

And AI-infused gadgets were everywhere.

Some odd highlights: an AI-powered vibrating clip-on that supposedly reduces stress, a picture frame that generates art on command, and a “self-aware” skateboard penguin (!?).

Microsoft announced a partnership with Midwest grid operator MISO.

Alexa Plus, Amazon’s newest AI assistant, is available after a rocky start.

But investors still seem “wary” of Amazon’s AI strategy, The Information reported.

Accenture bought Faculty, the UK AI company. Faculty CEO Marc Warner will become Accenture’s CTO.

Zhipu and MiniMax, two Chinese AI companies, raised $558m and $619m respectively in their IPOs this week. Both companies’ shares soared on listing.

Lambda is reportedly in talks to raise $350m in pre-IPO funding led by Mubadala Capital.

LMArena raised $150m at a $1.7b valuation.

AI accounted for nearly one third of global VC deals in 2025, according to a new PitchBook report.

AI-driven memory chip shortages could soon raise the price of consumer electronics across the board, Bloomberg reported.

MOVES

Prominent OpenAI researcher Jerry Tworek left to “try and explore types of research that are hard to do” at the company.

He reportedly left after a disagreement with Chief Scientist Jakub Pachocki over OpenAI’s research direction.

Meta hired C. J. Mahoney, Microsoft’s senior legal executive, as its new chief legal officer.

It also brought on Bill McGinley, former top lawyer for DOGE, as a lobbyist.

Nvidia hired Google Cloud’s Alison Wagonfeld as its first chief marketing officer.

OpenAI is hiring someone to “engage closely” with equity research analysts.

Google DeepMind’s Tim Rocktäschel has set up a company called “Recursive Superintelligence Ltd.”

RESEARCH

A new RAND analysis concluded, perhaps unsurprisingly, that governments are unprepared to stop a rogue AI in a catastrophic loss-of-control incident.

DeepSeek added 64 pages to its R1 paper, including an expanded safety report.

Researchers from Tsinghua University, BIGAI, and Penn State developed Absolute Zero Reasoner, a system where AI models improve by generating their own coding problems and solving them through self-play.

An AI tool developed by Alibaba has helped Chinese doctors detect 14 early-stage pancreatic cancers among 180,000 routine CT scans since November 2024.

An S&P Global study projected that powering AI data centers could worsen an existing copper shortage over the next 15 years.

A team of French researchers argued that popular AI interpretability techniques may produce too many false positives, likening them to a classic fMRI study that found “brain activity” in a dead salmon.

BEST OF THE REST

Casey Newton revealed how he figured out a viral Reddit post about food delivery fraud was totally fake.

San Francisco is awash with tech folks taking gray-market Chinese peptides, Jasmine Sun reports in a great New York Times piece (ft. photos from the infamous SF peptide rave).

Some large companies are actually letting employees use AI to work less (for the same pay), reported the Washington Post.

Steven Adler wrote a great piece explaining that modern AI systems, contrary to popular belief, are no longer “just predicting the next word.”

Local governments in China have funded 40 Black Mirror-esque training centers where humans — “cyber-laborers” — teach humanoid robots how to do household tasks.

Deepfaked pastors are reportedly scamming religious congregations across the US.

Reece Rogers’ Wired headline speaks for itself: “AI labor is boring. AI lust is big business.”

Thanks for reading. Have a great weekend.
