AI and synthetic DNA could be a lethal combination

Stronger gene-synthesis screening is vital to closing off AI’s ability to enable man-made pandemics

By Ben Stewart

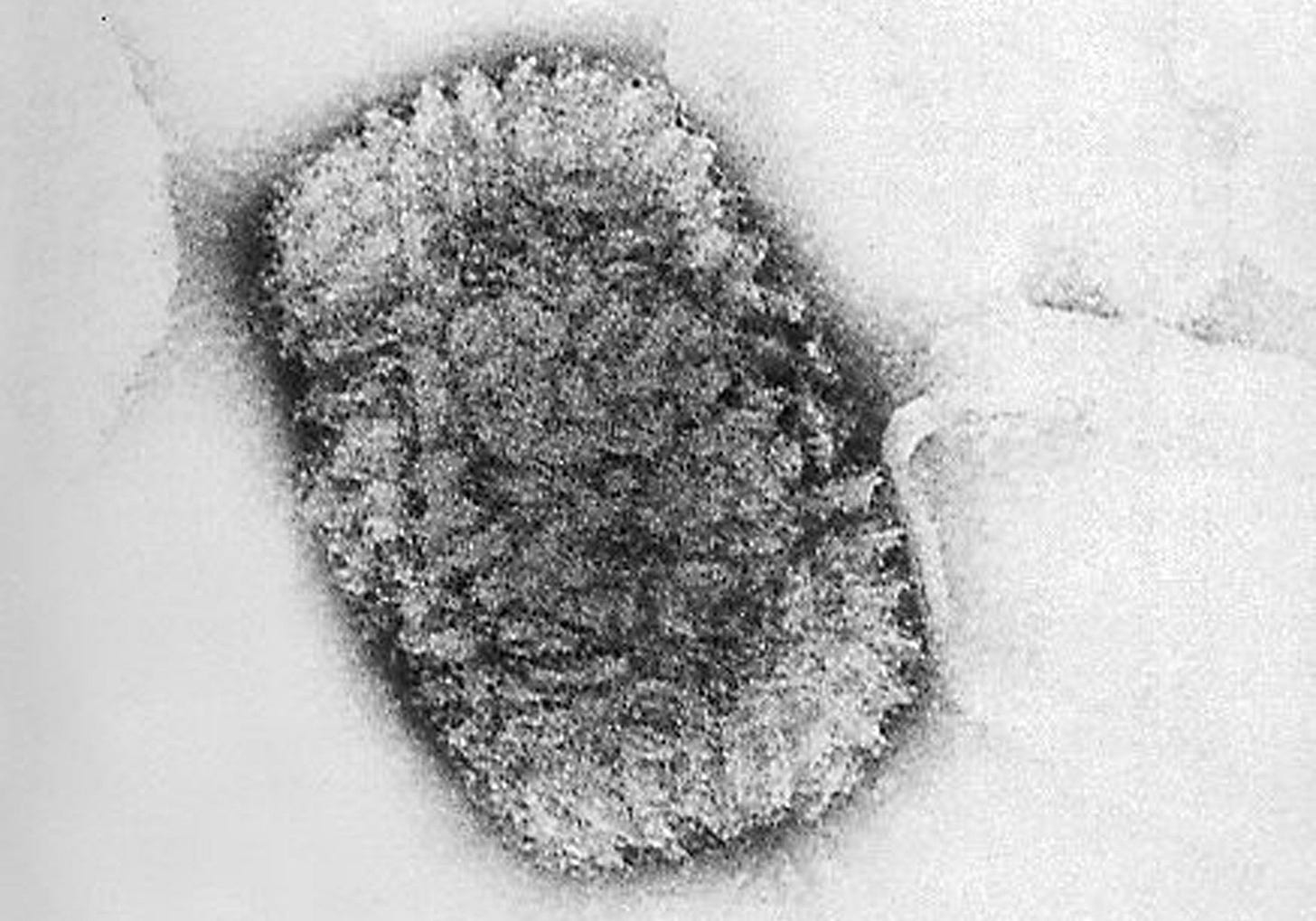

Before it was eradicated in 1980, smallpox was estimated to have killed 300 million people in the 20th century alone. To get your hands on a natural sample of smallpox today, you’d need to break into one of two highly secure sites, one in the US and the other in Russia.

But the smallpox genome — the genetic sequence that tells you how it is constructed — is freely accessible online. Scientists in 2017 were able to recreate the closely related horsepox using synthetic DNA — meaning DNA that had been made chemically from scratch, rather than drawn from any living organism. Synthetic viruses have been possible since 2002, when a team first synthesized poliovirus — which in the mid-20th century was killing or paralysing half a million people each year.

Breakthroughs in synthesis have given rise to a multi-billion-dollar industry of mail-order services providing custom synthetic DNA, which has proven incredibly useful to scientists doing valuable biological research. But unlike the pathogen stocks held in high-security labs, none of the materials for this synthetic route are tightly controlled.

This means that in the 21st century the main barrier to accessing dangerous pathogens has been expertise. AI is now threatening to remove that barrier too.

Instead of stealing pathogens from labs or finding them in nature, anyone can place an order and get an exact sequence ‘printed’ for them. Large language models (LLMs) and specialized biological models are on track to make it easier for novices to use synthetic DNA to create extremely dangerous pathogens. The combination threatens to bridge the divide between violent plans and obtaining the actual biological material that could cause mass casualties.

The status quo

A majority of companies providing synthetic DNA recognize the risks and are members of the International Gene Synthesis Consortium (IGSC). Its members commit to two kinds of screening: checking each order placed with them to see whether it could be dangerous, and checking the customer to see whether they raise red flags.

But current screening has flaws. For a start, participation in the IGSC is voluntary, not all companies have signed up, and regulations are inconsistent between countries. Typical screening also throws up many false positives, each of which then requires expensive human review.

Then there are ways round the rules. Orders could be split into sections and submitted through different synthesis companies, so no one provider can assess the danger posed by the full sequence. Screening has also depended on assessing how similar the sequence is to a list of pathogens identified as dangerous. But this approach will fail to flag less dangerous pathogens that have been modified to have enhanced pandemic potential, dangerous ones that have been modified to disguise their identity, or novel pathogens unlike any seen in nature.

And there may be ways to bypass ordering from companies entirely, as ‘benchtop synthesizers’ are being developed and sold. These enable scientists to print custom DNA within their own labs. Maintaining tamper-proof screening to restrict what these devices can be used for will be difficult.

AI’s biorisk problem

Those weaknesses in the current screening regime are becoming ever more worrying as AI becomes more competent at helping users with biology tasks. Frontier AI labs have all identified “biorisk” as one of the most concerning aspects of releasing new models to the public.

There are two main forms of AI that could be useful in creating a bioweapon. Large language models might help potential bioterrorists make plans, solve technical problems, and avoid detection, increasing the risk of deliberate pandemics. Meanwhile, biological design tools (narrow AI systems trained on biological data to predict and design biological sequences and structures) could alter dangerous sequences so they evade screening, or design pathogens that are more transmissible and deadly than those found in nature.

The evidence on how large this threat currently is remains mixed, but it is taken seriously by leading scientists and experts. Anthropic and OpenAI have recently strengthened the safeguards on their models. These aren’t perfect, and LLMs from open-source or incautious developers will likely be published with no safeguards at all. The situation is similar for biological design tools: only 3% of them had safety measures in 2025. A recent investigation by RAND and the Centre for Long-Term Resilience categorized 13 tools as high-risk and requiring immediate action, but eight of them are open-source.

In addition to model safeguards, we need to secure the other stages between a plan and a successful attack. With AI widening access to biological expertise, securing DNA synthesis means closing off a key path from near-term AI capabilities to global catastrophe.

Promising progress, murky future

Fortunately, the past few years have seen significant progress in making DNA synthesis secure.

New screening tools have been developed, such as IBBIS’ Common Mechanism, Battelle’s UltraSEQ, FAST-NA, and SecureDNA’s ‘Random Adversarial Thresholds’. Each goes beyond checking an order’s similarity to known pathogens, using approaches such as predicting the function of shorter DNA sequences, flagging ‘chimeric’ viruses pieced together from different species, or searching for mutated versions of concerning sequences. Each claims to be ‘AI-resilient’, and a recent red-teaming study suggested that screening tools could be patched to catch most sequences disguised by current AI tools.

But whether screening can be made robust against ongoing advances in AI tools is an open and troubling question. Attempts to disguise the species or function of ordered DNA will become more sophisticated, and it will become increasingly plausible to design pandemic-capable pathogens which appear less and less like familiar dangers.

For defenders to get ahead, we need to implement new measures. We need to track the emerging capabilities of LLMs and AI-enabled design tools, and design effective safeguards that keep pace with them. These include model unlearning, training LLMs to refuse dangerous requests, classifier-based output filtering, staged and restricted deployment, and using AI itself to test and patch screening approaches against AI-enabled evasion and novel designs.

Other steps can improve screening, such as combining sequence screening with customer screening, incorporating order metadata, notifying law enforcement where appropriate, and centralized anomaly detection for split orders. Screening should also be made mandatory in law and harmonized across jurisdictions to close loopholes.

We are not on track to do any of this effectively enough before high-risk AI models are widely available.

The need for US leadership

It’s essential for the US to drive progress on this issue given its lead in science, industry, and AI. Thanks to past efforts by many individuals, bipartisan support has grown. Executive orders from both Biden and Trump made federal funding conditional on using only synthesis providers that screen orders, and both aimed to establish a Framework for Nucleic Acid Synthesis Screening. Synthesis screening was even included in the recent AI Action Plan, and AI’s role in guarding against biological weapons was cited in one of the few coherent sections of Trump’s recent speech to the UN.

“We’ve been heartened to see Congressional leaders from both sides of the aisle taking gene synthesis screening seriously”, said Scott T. Weathers, associate director of government affairs at the think-tank Americans for Responsible Innovation. “Even before the release of the AI Action Plan, which prioritizes strong actions to address a key national security risk, members of Congress have been highly interested in protecting the American public in a bipartisan manner.”

Beyond the continuing bipartisan momentum, the Action Plan also gives specific directives that could be an impetus for Congressional action. The plan reiterates the federal funding requirement, asks for better data-sharing for customer screening, and tasks the Center for AI Standards and Innovation (CAISI) with evaluations of the threat model. CAISI is notably under-resourced and overburdened, so this final directive will likely be ineffective without an increase in staff, funding, and flexibility.

But the Action Plan could increase risks too. It recommends investment in “automated cloud-enabled labs” and in growing the quality and quantity of biological datasets. Both recommendations could accelerate the development of biological design tools and make it easier for novices to use them. While that could speed up medical advances, on the current path these tools would spread powerful biological capabilities around the world without safeguards.

If US investment and expertise really are vital to create biological datasets at scale, that provides leverage: the US could choose to steward the data, accelerating progress towards high-impact medical breakthroughs without threatening national security. It could also invest in developing, maintaining and implementing screening approaches that meet the AI challenge, and engage in the much-needed diplomacy to ensure that no country provides a loophole for attackers to use unscreened synthesis processes.

While the Action Plan overall has promise, there are reasons to doubt it will lead to effective action. The Trump White House has already contradicted the plan’s focus on denying adversaries’ access to AI compute by granting a license for the sale of advanced chips to China in exchange for a cut of the revenue. Self-consistent governance is not a safe assumption.

Despite the chaos of US politics, securing DNA synthesis remains an open path for policymakers — a chance to close off a major national security threat from AI. Will they take it?

Ben Stewart is a new AI Program Officer at Longview Philanthropy, though this piece was written in a freelance capacity and doesn’t represent the opinions of Longview. Previously, Ben was a researcher on catastrophic risks at Open Philanthropy (which is also Transformer’s primary funder) and a medical doctor.

Update Oct 18: Added attribution for the Centre for Long-Term Resilience to the investigation into risks from biological research tools.